Updated February 14, 2026. The artificial intelligence that seamlessly drafts your emails, edits your videos, and powers your company’s logistics isn’t magic. It’s the product of a quiet but monumental shift in computing hardware. Behind every major AI breakthrough of 2026 lies a sprawling, power-hungry infrastructure of highly specialized processors and massive data centers. This is the world of AI supercomputing and specialized chips, the hidden technology fueling the next AI boom, and it is fundamentally reshaping computing from the cloud to the phone in your hand.

While software has captured the headlines, the real story is in the silicon. The race to build more powerful and efficient AI is no longer just about clever algorithms; it’s a fierce competition to design and deploy the hardware that makes them possible. The very fabric of modern AI depends on it.

What Happened

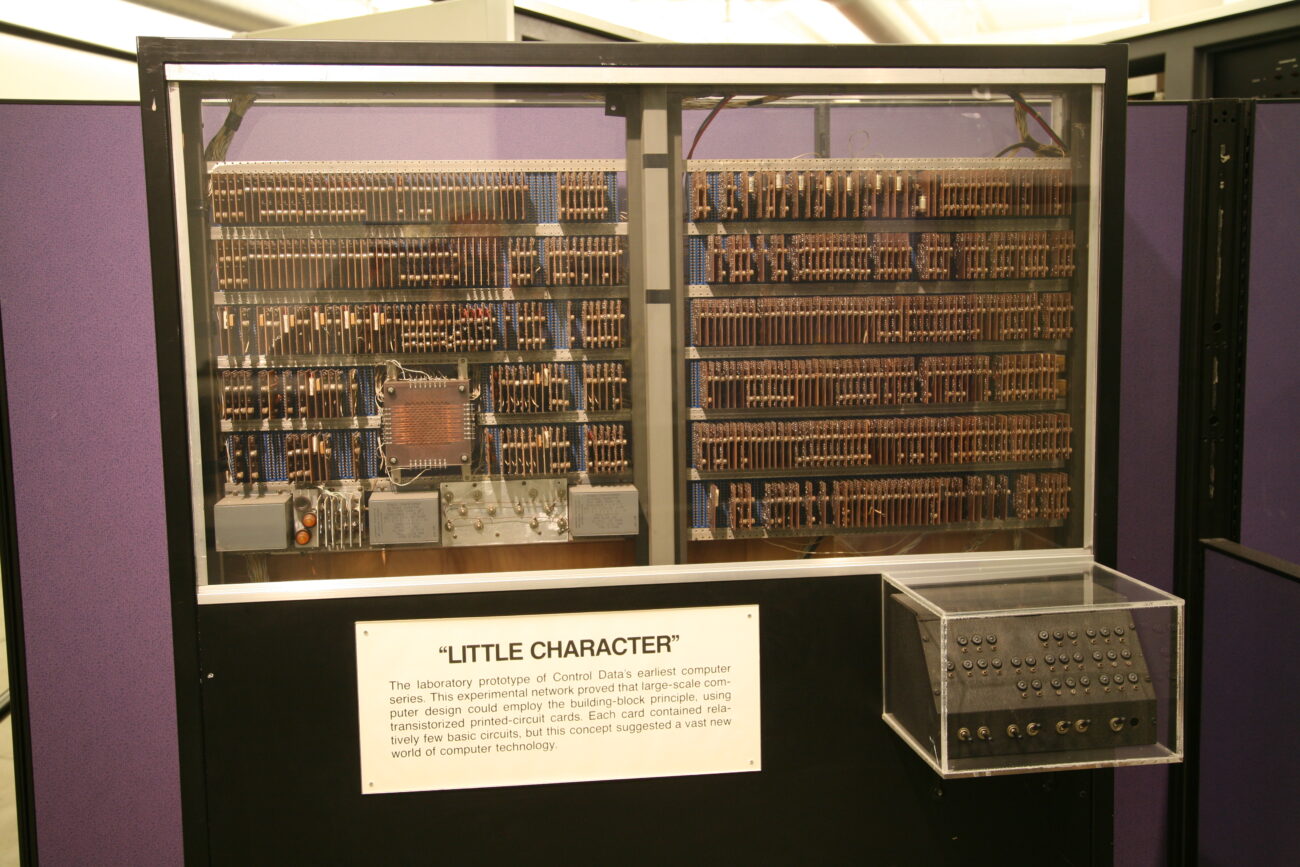

For decades, the Central Processing Unit (CPU) was the undisputed brain of any computer. It was a versatile generalist, capable of handling everything from spreadsheets to web browsing. But the explosive growth of artificial intelligence created a problem that CPUs couldn’t solve alone. Training a large AI model involves trillions of simple calculations, mostly multiplications and additions, that can be performed in parallel. A CPU, built around a relatively small number of powerful cores that excel at step-by-step work, gets bogged down by that sheer volume.
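
To make the parallel calculations described above concrete, here is a minimal sketch, assuming (for illustration) that the core workload is matrix multiplication, which dominates much of neural-network training. Each output cell is a small, independent run of multiply-adds, so every cell can be computed at the same time. The function names are hypothetical, and the thread pool only demonstrates that the tasks are independent; Python threads will not actually speed this up.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    # One output cell: a short run of multiply-add operations.
    return sum(a * b for a, b in zip(row, col))

def matmul_parallel(A, B, workers=4):
    """Multiply A (n x k) by B (k x m), treating every output cell as an
    independent task. No cell depends on any other, which is why chips
    with thousands of simple cores can compute them all at once."""
    cols = [list(c) for c in zip(*B)]  # the columns of B
    n, m = len(A), len(cols)
    tasks = [(i, j) for i in range(n) for j in range(m)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in task order, whatever order they finish in.
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), tasks))
    # Reassemble the flat list of results into an n x m matrix.
    return [values[i * m:(i + 1) * m] for i in range(n)]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```

A real model repeats this pattern across matrices with thousands of rows and columns, which is where the "trillions" come from.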

This created an opening for a new class of hardware. Graphics Processing Units (GPUs), originally designed to render video game graphics, proved exceptionally good at these parallel tasks because they pack thousands of simpler cores that apply the same operation to many pieces of data at once. That discovery kicked off a hardware revolution. Today, in early 2026, the landscape has evolved far beyond repurposed gaming chips.

Tech giants now design their own custom-built silicon, known as Application-Specific Integrated Circuits (ASICs). Google’s Tensor Processing Units (TPUs) and Amazon’s Trainium (for training) and Inferentia (for inference) chips are prime examples: processors engineered for one purpose, running AI models with brutal efficiency. These chips are networked by the thousands inside colossal data centers, forming AI supercomputers that function as modern-day “AI factories.” These systems are what allow companies to train the sophisticated generative video and scientific AI models that have become commonplace.

Why It Matters

This fundamental shift in hardware has profound implications for nearly every piece of technology we use. The demand for more intelligent, responsive, and predictive services has made these specialized systems a critical, non-negotiable layer of the global tech stack.

From the Cloud to Your Pocket

For large organizations, the AI arms race is being fought in the cloud. Cloud providers such as Microsoft Azure, Google Cloud, and AWS are investing billions to offer access to their massive AI supercomputing platforms. This allows businesses in finance, healthcare, and logistics to run complex enterprise analytics, like real-time fraud detection or drug discovery simulations, without the astronomical cost of building their own data centers. It’s a utility, like electricity, but for raw intelligence.

At the other end of the spectrum is “edge computing.” The smartphone in your pocket, the car you drive, and the smart speakers in your home now contain their own tiny, hyper-efficient AI chips, often called Neural Processing Units (NPUs). These chips allow AI tasks to happen directly on your device, without sending data to the cloud. This is why your phone can translate a conversation in real-time, enhance photos instantly, and offer predictive text that feels eerily accurate. It’s faster, more private, and doesn’t rely on a constant internet connection.

The Engine for Data-Heavy AI Services

The sophisticated AI services we now take for granted are simply not possible without this specialized hardware. The AI models behind them are growing in complexity at an exponential rate, demanding ever-increasing computational power. A standard data center from 2020 would lack the accelerators, power, and cooling needed to handle a single cutting-edge 2026 AI model.

Consider the data-heavy services that define our digital lives:

- Generative AI: Tools that create realistic video from a text prompt or compose original music require training on immense datasets, a process that can take weeks even on a supercomputer equipped with tens of thousands of specialized chips.

- Hyper-Personalization: Recommendation engines on streaming services and e-commerce sites analyze the behavior of millions of users simultaneously to deliver uniquely tailored suggestions. This continuous learning process is powered by dedicated AI hardware.

- Scientific Research: Fields like climate modeling, genomics, and materials science rely on AI supercomputers to sift through petabytes of data, accelerating discoveries that would have once taken decades.

Without this hardware foundation, innovation in AI would grind to a halt. The chips are the engine, and the data is the fuel.

What to Watch Next

As we move through 2026, the evolution of AI hardware is accelerating, bringing both immense opportunities and significant challenges. The decisions made in design labs and boardrooms today will dictate the trajectory of AI for the next decade.

The Energy Equation

A critical challenge is the staggering energy consumption of AI supercomputers. Some large-scale training runs consume as much electricity as a small city, raising serious environmental and cost concerns. The next frontier in chip design isn’t just about raw power; it’s about “performance per watt,” the amount of useful computation a chip delivers for each unit of energy it draws. Expect energy-efficient chip architectures and innovative data center cooling solutions to become top priorities.
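
The arithmetic behind “performance per watt” and training energy is simple enough to sketch. The helper names below are hypothetical, and every chip figure is invented for illustration rather than taken from any real product. The energy estimate counts only the chips themselves and ignores cooling, networking, and other data center overhead.

```python
def perf_per_watt(ops_per_second: float, watts: float) -> float:
    """Operations delivered per joule of energy (ops/s divided by W)."""
    return ops_per_second / watts

def training_energy_mwh(num_chips: int, watts_per_chip: float, days: float) -> float:
    """Energy drawn by the chips alone over a training run, in megawatt-hours.
    Ignores cooling, networking, and other data center overhead."""
    total_watts = num_chips * watts_per_chip
    hours = days * 24
    return total_watts * hours / 1e6  # watt-hours -> megawatt-hours

# Hypothetical chips: B has lower raw throughput but uses half the power.
chip_a = perf_per_watt(ops_per_second=1.0e15, watts=1000)
chip_b = perf_per_watt(ops_per_second=0.8e15, watts=500)
print(chip_b / chip_a)  # 1.6

# Hypothetical run: 10,000 chips at 700 W each for 30 days.
print(training_energy_mwh(num_chips=10_000, watts_per_chip=700, days=30))  # 5040.0
```

In this made-up comparison, the “slower” chip does 60% more work per unit of energy, which is exactly the trade-off that becomes decisive once power, not silicon, is the binding constraint.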

The Next Wave of Chips and Geopolitics

The innovation pipeline is already focused on what comes after GPUs and TPUs. Researchers are actively developing neuromorphic chips that mimic the structure of the human brain and exploring optical computing, which uses light instead of electricity for calculations. Proponents argue these technologies could eventually be orders of magnitude faster and more energy-efficient, though both remain largely experimental.

At the same time, the global supply chain for these advanced semiconductors has become a major geopolitical flashpoint. A nation’s access to cutting-edge chip manufacturing is now directly tied to its economic competitiveness and national security. Watch for continued government investment and trade policies aimed at securing domestic semiconductor production.

The invisible world of silicon is where the future of artificial intelligence is being built. The chips may be small, but the impact of this hardware revolution is anything but.

Ava Brix is an accomplished author dedicated to crafting engaging narratives across various genres. With a passion for storytelling and a commitment to literary excellence, she strives to connect with readers on a profound level. Ava believes in the power of stories to illuminate the human experience and inspire positive change.